CUSTOM SOLUTIONS AND CUSTOM REQUIREMENTS CAN BE FULFILLED.

I have 4+ years of experience in data-driven development, DataOps, DevOps, and data modelling. I can deploy open-source or managed applications and write Python code as well.

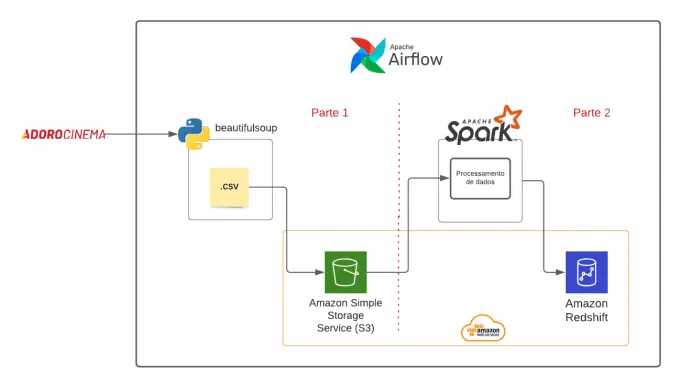

-Write and test PySpark code in Python and deliver it as a notebook

-ETL jobs in PySpark using RDDs, DataFrames, and Spark SQL

-Data extraction from custom sources such as AWS S3, APIs, Azure Storage, GCP, and notebooks

-Deployment support on AWS, Azure, Databricks, and GCP

-Data cleaning in pandas and PySpark

-Deploy Airflow in the cloud and create DAGs

-Connect dbt to Airflow

-Custom Python code using Selenium WebDriver, web scraping, etc.

-ETL pipelines using AWS EMR, AWS Glue, GCP Cloud Composer, Dataflow, etc.

-Deploy and configure lakeFS

-Experience working with Common Crawl data

For custom orders, feel free to consult me; I am always happy to work on custom orders and requirements. I love ETL and can get it done for you. The price is negotiable.

Price: $50.00